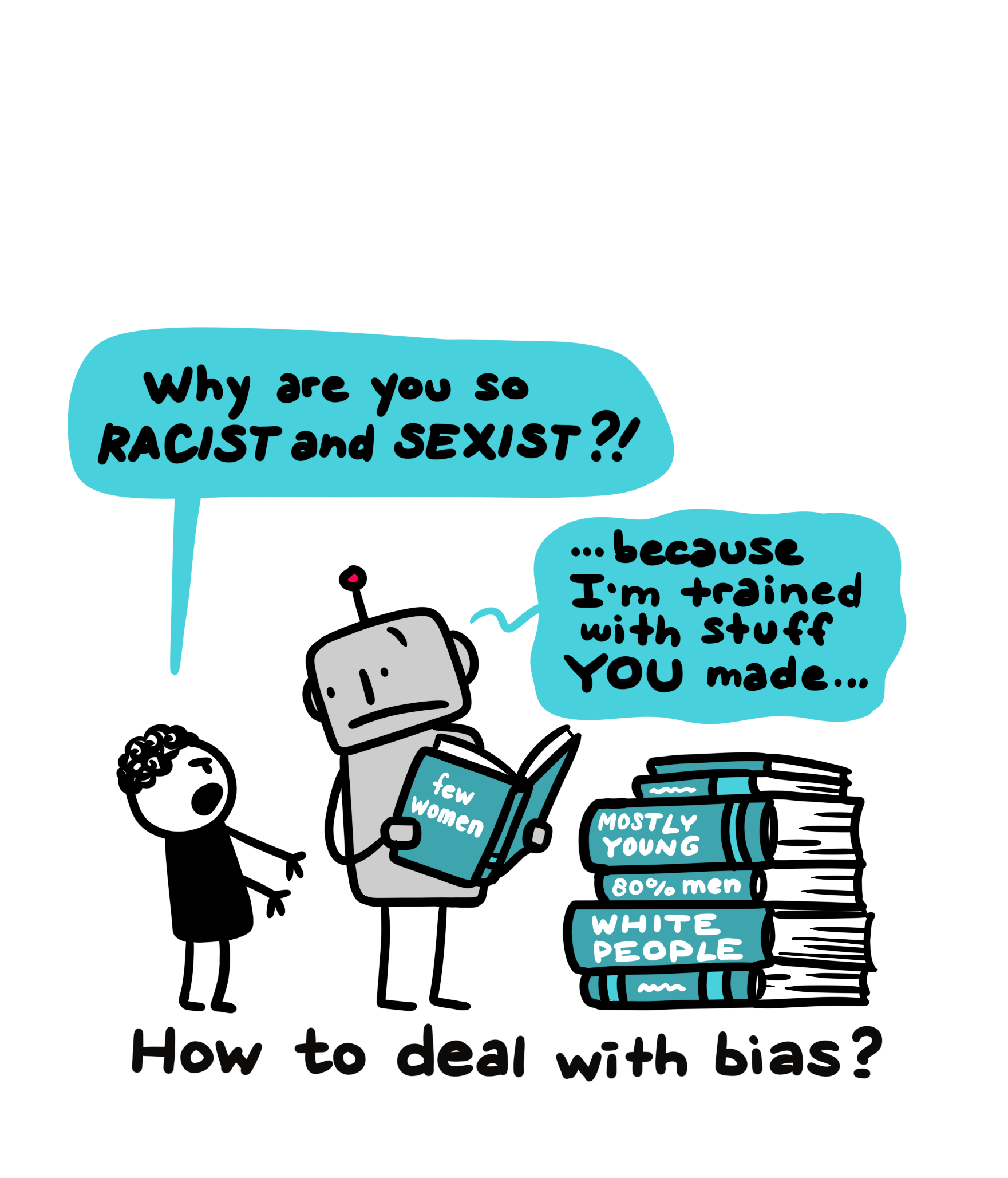

Bias and what to do with it

By learning language, Large Language Models also absorb representations of the culture and society that their training texts describe and express. We are often upset when chatbots produce biased outputs, such as stereotypical associations between genders and professions, but we should remember that these outputs are the product of societal stereotypes conveyed by what we write and how we write it. Are we seeing our own (biased) culture and society in a mirror, and feeling rather embarrassed by the reflection? One question to ask is: should AIs then be “cleaned” (or, more technically, “debiased”)? If so, who decides which biases should be mitigated or removed? And are we sure these strategies would work?