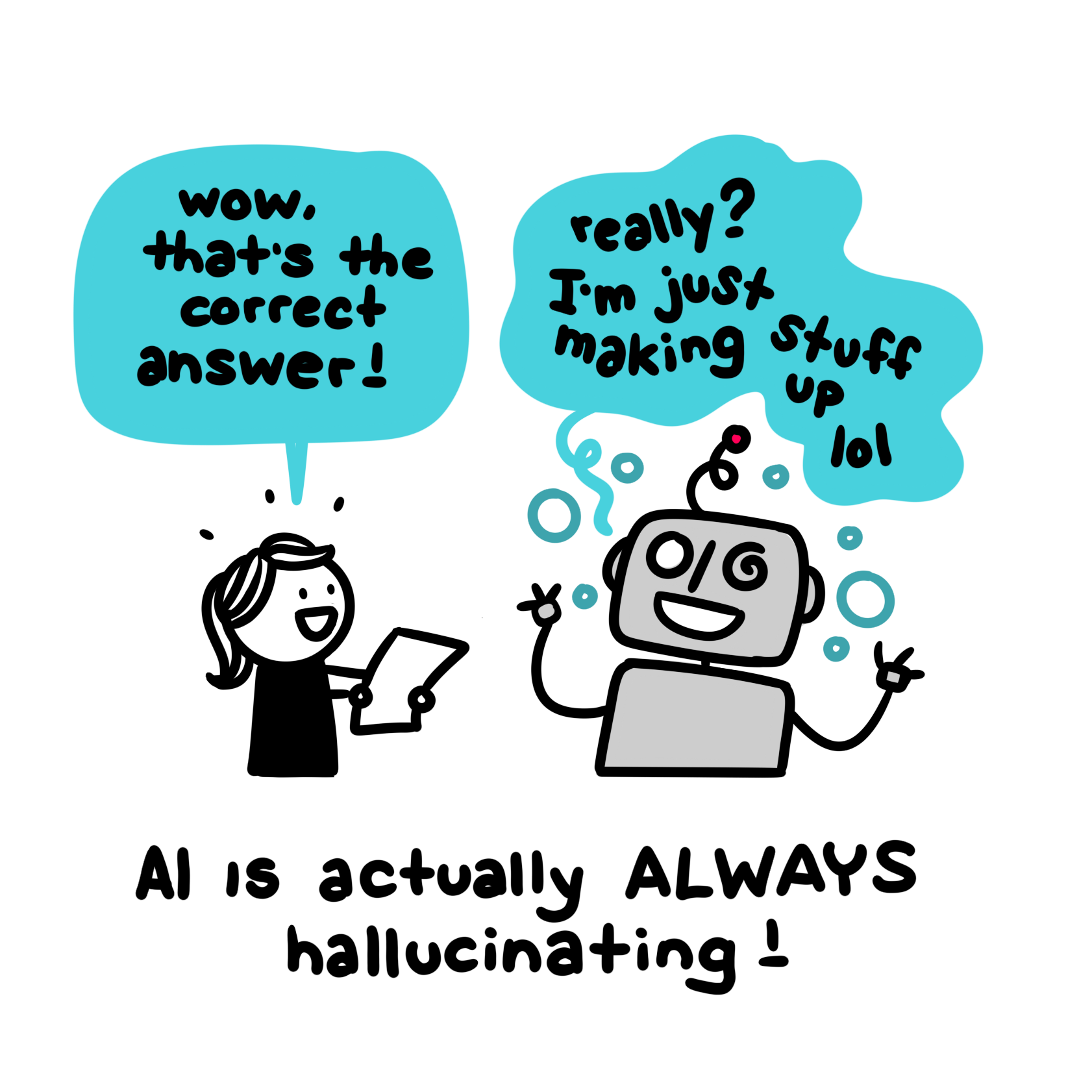

So-called hallucinations are the natural specialty of ChatGPT

Large Language Models are trained to predict (one of) the most probable next word(s) given a sequence of words. In other words (ha ha): to generate language. So they always make up sentences: it's their job! Simply because they have seen a lot (A LOT!) of language data describing facts, it happens more often than not, especially for common-knowledge facts, that the most probable sequence they generate is also "correct", in the sense of factually sound. But be careful when trusting what models say, even when they sound very, very confident!
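To make "most probable" concrete, here is a deliberately tiny sketch. It is a toy bigram model, not how ChatGPT works internally (real LLMs use large neural networks over tokens, not raw word counts), and the three-sentence corpus is made up for illustration. The helper names `train_bigrams` and `generate` are hypothetical. The point it shows: the model picks whatever continuation was most frequent in its training data, which is not necessarily what is true.

```python
from collections import Counter, defaultdict

def train_bigrams(corpus):
    """Count, for each word, how often each other word follows it (toy model)."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def generate(counts, start, max_words=10):
    """Greedily append the most frequent next word until no continuation is known."""
    words = [start]
    for _ in range(max_words):
        followers = counts.get(words[-1])  # .get avoids adding empty entries
        if not followers:
            break
        words.append(followers.most_common(1)[0][0])
    return " ".join(words)

# Hypothetical corpus: the wrong fact simply appears more often than the right one.
corpus = [
    "the capital of australia is sydney",
    "the capital of australia is sydney",
    "the capital of australia is canberra",
]
counts = train_bigrams(corpus)
print(generate(counts, "the"))  # the capital of australia is sydney
```

The output is fluent and "confident", yet wrong: after "is", the word "sydney" was seen twice and "canberra" once, so the frequency-driven choice wins. When the training data mostly states the correct fact, the same mechanism produces a correct sentence, which is why models are usually right about common knowledge.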