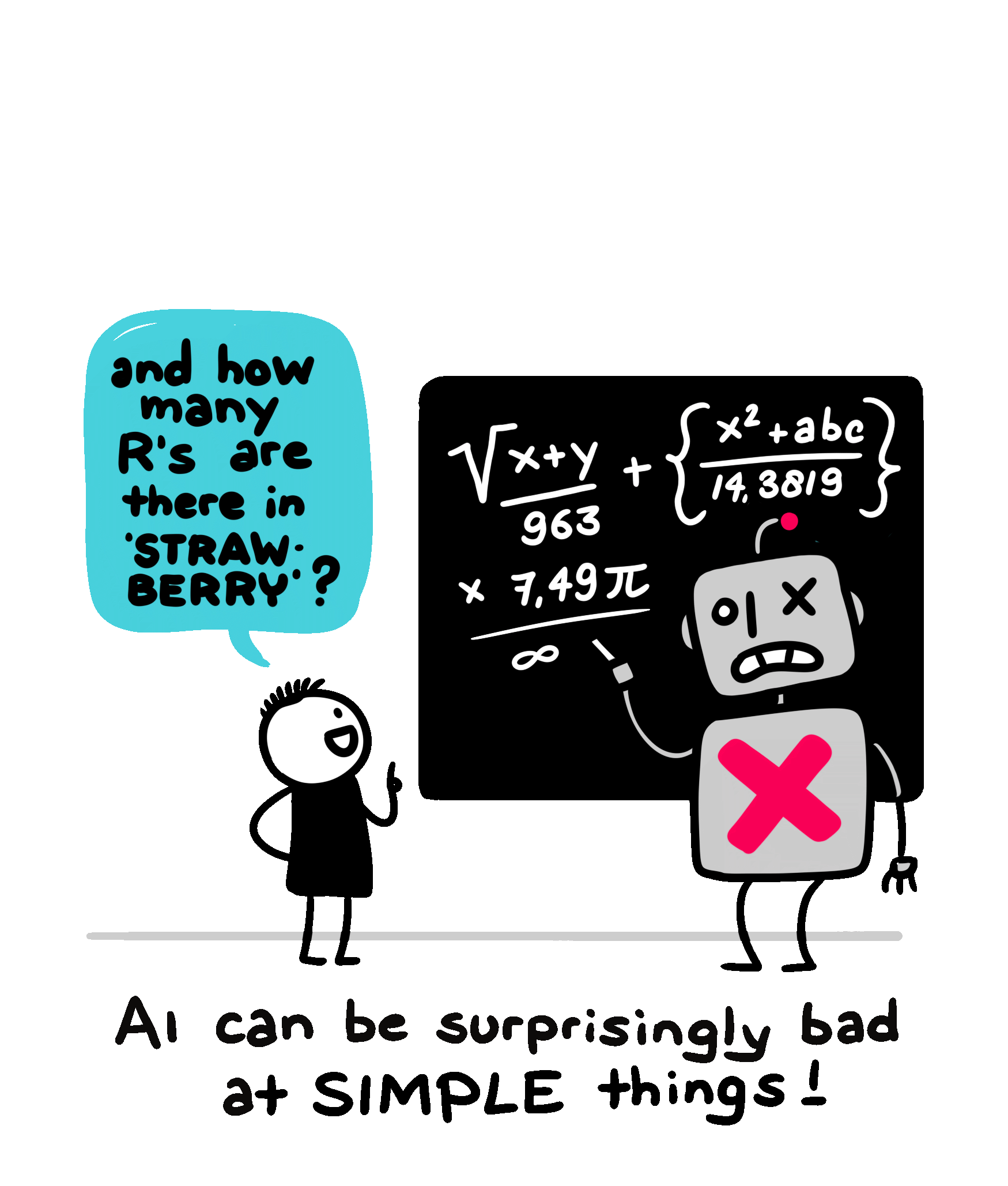

AI can be bad at simple things

Some tasks that appear very easy to us, such as counting how many times a given letter occurs in a word, or telling the word's length in characters, pose big challenges to Large Language Models. This is because these tasks are not an explicit part of their training, which instead teaches models to predict the most likely next word in a sentence. On top of that, models are trained on portions of words (tokens), not on individual characters, so they never directly see the letters that make up a word. Also, in general, while LLMs acquire some counting abilities, they are not that good at calculations (even though at times it might seem so!).
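To make the "portions of words" point more concrete, here is a minimal Python sketch. It is only an illustration: the vocabulary `TOY_VOCAB`, its numeric IDs, and the helper `toy_tokenize` are all made up for this example, and real LLM tokenizers use much larger vocabularies and different splitting rules. The idea is simply that ordinary code works on characters, while a model receives something closer to the list of IDs at the end.

```python
# Hypothetical toy vocabulary: the pieces and IDs are invented for illustration
# and do not come from any real model's tokenizer.
TOY_VOCAB = {"str": 101, "aw": 102, "berry": 103}


def toy_tokenize(word, vocab):
    """Greedily split `word` into the longest pieces found in `vocab`."""
    pieces = []
    i = 0
    while i < len(word):
        # Try the longest candidate piece first, then shorter ones.
        for j in range(len(word), i, -1):
            if word[i:j] in vocab:
                pieces.append(word[i:j])
                i = j
                break
        else:
            # No known piece starts here: fall back to a single character.
            pieces.append(word[i])
            i += 1
    return pieces


word = "strawberry"

# Character-level view: what ordinary code (and a human reader) works with.
char_length = len(word)
r_count = word.count("r")

# Token-level view: roughly what a language model is given instead.
pieces = toy_tokenize(word, TOY_VOCAB)
token_ids = [TOY_VOCAB.get(p, -1) for p in pieces]  # -1 marks an unknown piece

print(char_length, r_count)
print(pieces)
print(token_ids)
```

With this toy vocabulary, the ten-character word becomes just three IDs, and nothing in those IDs says how many letters each piece contains or which letters they are. Counting characters from that representation is something a model has to pick up indirectly, which is one plausible reason why it often gets such questions wrong.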